Compression - what is the right API to use?

September 1, 2014

Tags: java Compression SNAPI LZ4 GZIP metrics ZLIB

Systems use compression mainly to save space and to cut the time spent moving bytes over the network.

Compressing and uncompressing a very large number of bytes in one go is counterproductive, so we pick an upper bound on input size and stay within it to understand the boundaries.

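There are two well-known families of compression algorithms:

- Huffman (tree based): Inflate/Deflate, GZip
- Lempel-Ziv (dictionary based): LZ4, LZF, Snappy

Strictly, Deflate (and GZip, which wraps it) runs an LZ77 dictionary pass before Huffman coding; it is grouped here by its Huffman stage.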

Now for some metrics on the code that can be used for compression and decompression with the different APIs. The examples below look only at the fastest compression and decompression each API offers, and compare their speed and compressed size.

GZip code -
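A minimal sketch of a GZip round trip using the JDK's `java.util.zip` streams. It is not the code that produced the metrics in this post; the class and method names (`GzipSketch`, `compress`, `uncompress`) and the initial buffer sizes are illustrative assumptions.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Hypothetical helper; names are illustrative, not from the original post.
class GzipSketch {

    // Compress a byte array into GZIP format.
    static byte[] compress(byte[] input) {
        // Pre-size the sink (a guess) to limit internal array growth.
        ByteArrayOutputStream sink = new ByteArrayOutputStream(Math.max(32, input.length / 2));
        try (GZIPOutputStream gzip = new GZIPOutputStream(sink)) {
            gzip.write(input);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // The stream is closed here, so the GZIP trailer has been written.
        return sink.toByteArray();
    }

    // Restore the original bytes from GZIP data.
    static byte[] uncompress(byte[] compressed) {
        ByteArrayOutputStream sink = new ByteArrayOutputStream(Math.max(32, compressed.length * 2));
        try (GZIPInputStream gzip = new GZIPInputStream(new ByteArrayInputStream(compressed))) {
            byte[] chunk = new byte[8192];
            int read;
            while ((read = gzip.read(chunk)) != -1) {
                sink.write(chunk, 0, read);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return sink.toByteArray();
    }
}
```

Both directions go through streams here, which is the style the observations further down describe as the slow one.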

Snappy code -

LZF - ning compress code -

Deflater/Inflater code -
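A minimal sketch of the same round trip with the JDK's `java.util.zip.Deflater` and `java.util.zip.Inflater` classes, again not the code behind the metrics. `DeflateSketch` and its methods are hypothetical names, `Deflater.BEST_SPEED` is an assumed setting chosen to match the "fastest" focus of this post, and the sketch assumes the caller stores the original length next to the compressed bytes.

```java
import java.io.ByteArrayOutputStream;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

// Hypothetical helper; names and the BEST_SPEED level are assumptions.
class DeflateSketch {

    static byte[] compress(byte[] input) {
        Deflater deflater = new Deflater(Deflater.BEST_SPEED);
        try {
            deflater.setInput(input);
            deflater.finish(); // no more input will follow
            ByteArrayOutputStream sink = new ByteArrayOutputStream(Math.max(32, input.length / 2));
            byte[] chunk = new byte[8192];
            while (!deflater.finished()) {
                int written = deflater.deflate(chunk);
                sink.write(chunk, 0, written);
            }
            return sink.toByteArray();
        } finally {
            deflater.end(); // release native zlib memory
        }
    }

    // Assumes the caller knows the original length, so the output is allocated once.
    static byte[] uncompress(byte[] compressed, int originalLength) {
        Inflater inflater = new Inflater();
        try {
            inflater.setInput(compressed);
            byte[] output = new byte[originalLength];
            int offset = 0;
            while (offset < output.length) {
                int n = inflater.inflate(output, offset, output.length - offset);
                if (n == 0) {
                    break; // no progress: input exhausted or stream ended early
                }
                offset += n;
            }
            if (offset != originalLength) {
                throw new IllegalArgumentException("data inflated to " + offset + " bytes, expected " + originalLength);
            }
            return output;
        } catch (DataFormatException e) {
            throw new IllegalArgumentException("corrupt deflate data", e);
        } finally {
            inflater.end();
        }
    }
}
```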

LZ4 compression

Common code used by all the above APIs -
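As a rough idea of what shared measurement code could look like, here is a small timing harness. It is a sketch under assumptions, not the harness used for the graphs: `CodecTimer`, `samplePayload`, and `averageNanos` are hypothetical names, and the repetitive payload is only a stand-in for real data.

```java
import java.nio.charset.StandardCharsets;
import java.util.function.UnaryOperator;

// Hypothetical timing harness; not the code behind the published graphs.
class CodecTimer {

    // Keeps results observable so the JIT is less likely to drop the calls.
    static long blackhole;

    // Build a repetitive, compressible payload of the requested size.
    static byte[] samplePayload(int size) {
        byte[] pattern = "compression-metrics-".getBytes(StandardCharsets.US_ASCII);
        byte[] data = new byte[size];
        for (int i = 0; i < size; i++) {
            data[i] = pattern[i % pattern.length];
        }
        return data;
    }

    // Average wall-clock nanoseconds per call, measured after a warm-up pass.
    static long averageNanos(UnaryOperator<byte[]> codec, byte[] input, int rounds) {
        if (rounds <= 0) {
            throw new IllegalArgumentException("rounds must be positive");
        }
        for (int i = 0; i < rounds; i++) {
            blackhole += codec.apply(input).length; // warm-up, not timed
        }
        long start = System.nanoTime();
        for (int i = 0; i < rounds; i++) {
            blackhole += codec.apply(input).length;
        }
        return (System.nanoTime() - start) / rounds;
    }

    public static void main(String[] args) {
        byte[] payload = samplePayload(64 * 1024);
        // A plain copy stands in for a real compress or uncompress call.
        long nanos = averageNanos(bytes -> bytes.clone(), payload, 1000);
        System.out.println("average ns per call: " + nanos);
    }
}
```

Any of the codecs can be plugged in as the `UnaryOperator<byte[]>`, which keeps the measurement loop identical across APIs.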

Let us look at the performance and compression metrics of the different flavors listed above, and their advantages.

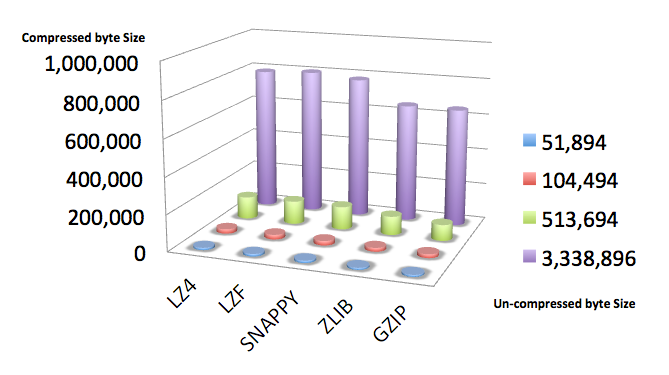

The graph below shows the compressed size for the different compression algorithms.

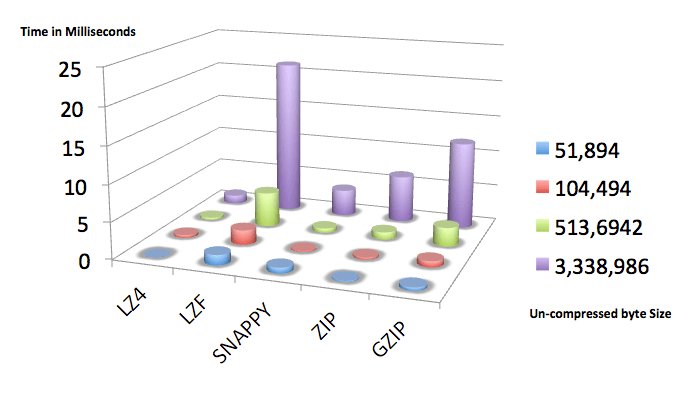

The graph below shows the time taken to uncompress bytes, by input size. The times are under a second and are displayed in milliseconds.

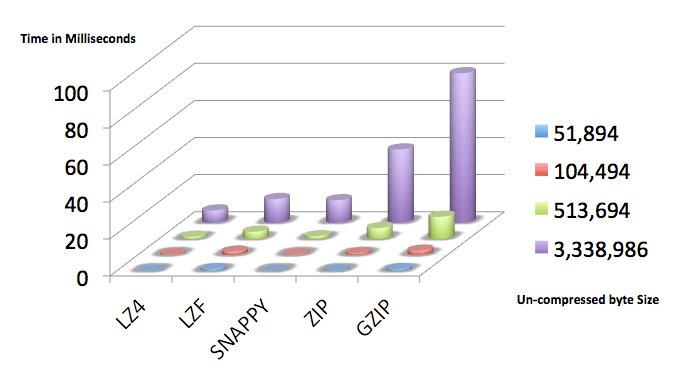

The graph below shows the time taken to compress bytes, by input size. The times are under a second and are displayed in milliseconds.

In my experience with compression APIs, the stream-based implementations are all too slow: they allocate memory frequently, and each resize copies bytes into a larger array, which wastes both memory and processing time. It is always better to preallocate the buffer at the beginning and shrink it afterwards if needed, which speeds up processing.
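The preallocate-then-shrink idea can be sketched with the JDK's `Deflater` as follows. This is an illustration, not the measured code: `PreallocatedDeflate` is a hypothetical name, and the initial size (input length plus a small margin) is an assumed guess, with a growth step kept only as a safety net.

```java
import java.util.Arrays;
import java.util.zip.Deflater;

// Hypothetical helper showing one up-front allocation and one final shrink.
class PreallocatedDeflate {

    static byte[] compress(byte[] input) {
        Deflater deflater = new Deflater(Deflater.BEST_SPEED);
        try {
            deflater.setInput(input);
            deflater.finish();
            // Assumed guess: input size plus a margin for incompressible data.
            byte[] buffer = new byte[input.length + (input.length >> 8) + 64];
            int used = 0;
            while (true) {
                used += deflater.deflate(buffer, used, buffer.length - used);
                if (deflater.finished()) {
                    break;
                }
                // Safety net only: the guess was too small, so grow the buffer.
                buffer = Arrays.copyOf(buffer, buffer.length * 2);
            }
            // Shrink once to the exact compressed size.
            return Arrays.copyOf(buffer, used);
        } finally {
            deflater.end(); // release native zlib memory
        }
    }
}
```

In the common case the loop runs once, so the only copy is the final shrink.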

Total compression and uncompression time should be close to O(k) + O(n) + O(t)

Compression using the Huffman algorithm is slow because it has to build a tree structure that represents the whole buffer.
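To make the tree-building cost concrete, here is a textbook Huffman construction that counts byte frequencies over the whole buffer and derives a code length per symbol. It is a sketch for illustration only: `HuffmanSketch` is a hypothetical name, and real Deflate implementations use length-limited canonical codes rather than this plain version.

```java
import java.util.PriorityQueue;

// Textbook Huffman tree; illustrative only, not what zlib does internally.
class HuffmanSketch {

    // Leaves carry a symbol; internal nodes carry only the combined weight.
    static final class Node {
        final long weight;
        final int symbol;
        final Node left;
        final Node right;

        Node(long weight, int symbol, Node left, Node right) {
            this.weight = weight;
            this.symbol = symbol;
            this.left = left;
            this.right = right;
        }
    }

    // Returns the code length in bits for each byte value (0 if it never occurs).
    static int[] codeLengths(byte[] input) {
        long[] freq = new long[256];
        for (byte b : input) {
            freq[b & 0xFF]++; // one full pass over the buffer
        }
        PriorityQueue<Node> queue = new PriorityQueue<>((a, b) -> Long.compare(a.weight, b.weight));
        for (int s = 0; s < 256; s++) {
            if (freq[s] > 0) {
                queue.add(new Node(freq[s], s, null, null));
            }
        }
        int[] lengths = new int[256];
        if (queue.isEmpty()) {
            return lengths;
        }
        if (queue.size() == 1) {
            lengths[queue.peek().symbol] = 1; // a lone symbol still needs one bit
            return lengths;
        }
        // Repeatedly merge the two lightest nodes until one root remains.
        while (queue.size() > 1) {
            Node a = queue.poll();
            Node b = queue.poll();
            queue.add(new Node(a.weight + b.weight, -1, a, b));
        }
        assign(queue.poll(), 0, lengths);
        return lengths;
    }

    // A leaf's depth in the tree is its code length.
    private static void assign(Node node, int depth, int[] lengths) {
        if (node.left == null) {
            lengths[node.symbol] = depth;
            return;
        }
        assign(node.left, depth + 1, lengths);
        assign(node.right, depth + 1, lengths);
    }
}
```

For the input `aaaabbc`, the most frequent byte `a` gets a 1-bit code while `b` and `c` get 2 bits each.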

Based on the above metrics, the order of preference for compression based on size would be

The order of preference for time taken for compression/decompression would be

LZ4 is the clear winner when it comes to speed, and the Deflate/Inflate API comes out ahead when the goal is saving space.

We need to be careful when choosing Deflate/Inflate, as we might not get the bang for the buck on a slow server: we may spend more time on compression than we save on the network. Banyan can be configured to use the LZ4, Snappy, or Deflate/Inflate algorithms.
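The trade-off between compression time and network time can be written as simple arithmetic. The sketch below is a hypothetical cost model, not part of Banyan: `CompressionTradeoff` and all of its parameters are assumed names, and the caller is expected to supply measured values.

```java
// Hypothetical cost model; every parameter is a measurement the caller supplies.
class CompressionTradeoff {

    // True when compress + send compressed + uncompress beats sending raw bytes.
    static boolean worthCompressing(long rawBytes, long compressedBytes,
                                    double compressSeconds, double uncompressSeconds,
                                    double bytesPerSecond) {
        double plainSeconds = rawBytes / bytesPerSecond;
        double packedSeconds = compressSeconds
                + compressedBytes / bytesPerSecond
                + uncompressSeconds;
        return packedSeconds < plainSeconds;
    }
}
```

With 1,000,000 raw bytes shrinking to 200,000 over a 1,000,000 bytes-per-second link, compression pays off if it takes 0.05 s to compress and 0.01 s to uncompress (0.26 s against 1.0 s), but not on a slow server that needs 2 s to compress.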
